Z-Image is a beginner-friendly AI model that turns your text prompts into high-quality images. It can create realistic photos, anime characters, product shots, and large, detailed scenes with very little setup. The model is fast, stable, and works across multiple platforms—HuggingFace, FAL.ai, and ComfyUI—so you can choose the workflow that fits your skill level.

What Is Z-Image?

If you’re wondering “what is Z-Image.ai?” or how to get started, think of it as a powerful, easy-to-use tool for generating images with Z-Image AI models from simple text descriptions.

Core Technology Behind Z-Image

Z-Image is built on a turbo inference engine designed to generate images in only a few sampling steps, which is what makes it feel fast and responsive even for beginners. Prompts are processed in near real time, so most images finish in just a few seconds.

The model also supports high-resolution generation, producing clear textures, sharp edges, and detailed scenes without extra plugins. The same model runs on HuggingFace, FAL.ai, and ComfyUI, so you can move between tools without switching models.

Another key feature is its enhanced multimodal prompt understanding. This helps Z-Image interpret your text more accurately—meaning better poses, cleaner lighting, and fewer weird artifacts. It also improves multi-image consistency, letting you keep the same character or object across a series of images.

What Can Z-Image Generate?

Z-Image covers all your core image needs with simple prompts—no pro skills required.

-

High-quality realistic images: Photography-level shots with crisp textures, balanced lighting, and natural details (e.g., portraits, landscapes).

-

Anime/stylized characters: Custom designs from chibi to cyberpunk—captures your desired vibe without complicated tweaks.

-

Product renderings: Clean, professional shots for e-commerce, marketing, or packaging (highlights details like fabric or metal finishes).

-

Large, detailed scenes: Immersive visuals (fantasy cities, mountain valleys) with coherent elements from foreground to background.

-

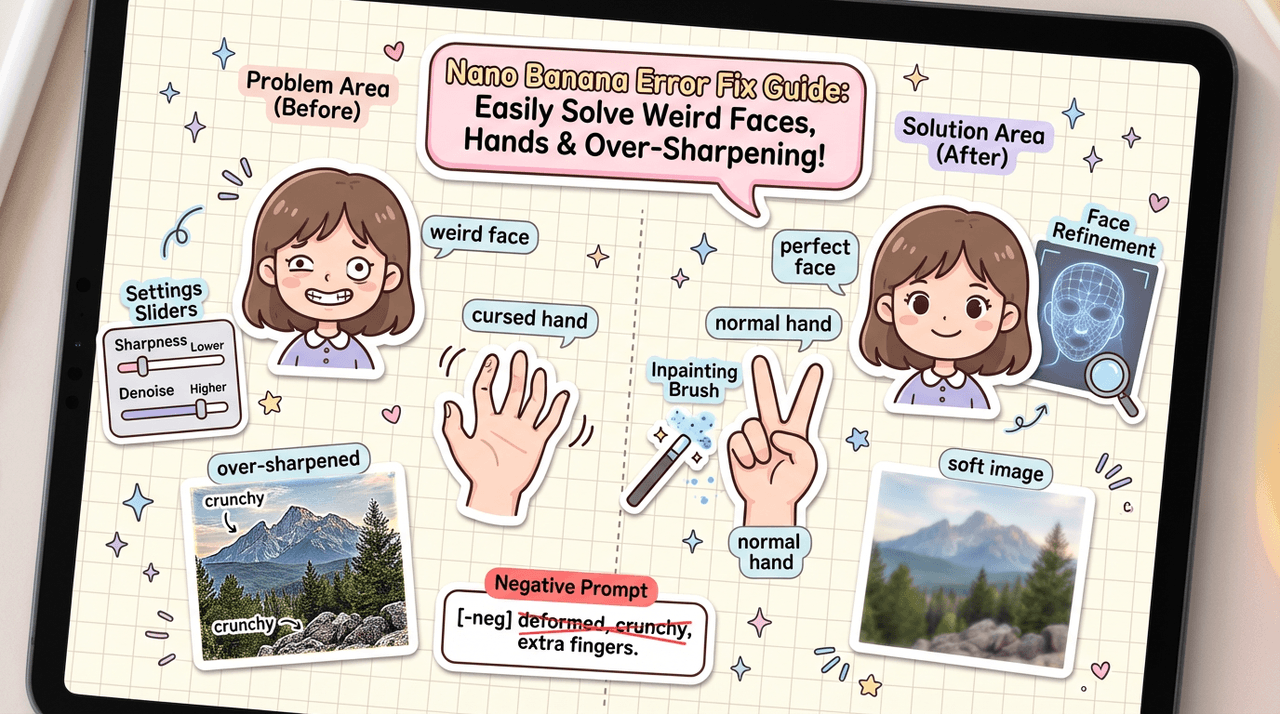

Image repair & inpainting/outpainting: Fix blurs, remove unwanted objects, fill gaps, or expand scenes—all with text prompts.

-

Multi-image consistency: Keep the same character, object, or style across a series (perfect for storyboards or product variants).

Why Z-Image Outperforms Alternatives

Z-Image stands out from other AI image models not just for its ease of use, but for solving the pain points that often frustrate beginners and creators alike: speed, accessibility, and consistency. Here’s why it outshines the alternatives.

1. Ultra-Fast Inference

Speed is where Z-Image truly pulls ahead—no more waiting minutes for a single image. Its built-in turbo engine prioritizes quick, smooth results without sacrificing quality, making it perfect for fast workflows or batch projects.

It delivers most images in seconds, not minutes—even complex scenes or high-resolution shots finish faster than many competitors. Low latency means you can iterate on ideas quickly (tweak a prompt and get a new result right away) or tackle batch generation (e.g., 10+ product variants) without delays.

Whether you’re a beginner testing prompts or a creator cranking out content, Z-Image’s speed keeps your workflow moving—no downtime, no frustration.

2. Superior Image Quality

Z-Image blends speed with polished, professional-grade quality—no tradeoffs for beginners.

It delivers sharper details (crisp textures, clear edges, fine lines) across realistic and stylized visuals, from product shots to character designs. It also produces fewer artifacts and distortions than many models: fewer misshapen features, warped backgrounds, or odd color blots.

Two flexible modes fit different needs:

- Fidelity mode: Prioritizes prompt accuracy (ideal for realistic shots like portraits or e-commerce products).
- High-resolution mode: Boosts pixel count for large uses (posters, headers) without losing clarity.

Recommended Resolution Settings (Beginner-Friendly)

- Social media (Instagram/TikTok): 1024x1024 (square) or 1080x1920 (9:16)
- E-commerce product shots: 1280x1280 (great for zoomed-in views)
- Posters/headers: 2048x1080 (widescreen) or 2048x2048 (large square)
- Storyboards/character sheets: 1024x768 (4:3, easy to batch-generate)

No complicated tweaks—just pick the size that fits your project, and Z-Image keeps details sharp and colors true.
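If you generate from a script rather than a UI, a small helper can sanity-check these presets. The sketch below is an illustration, not part of any Z-Image tool. It assumes widths and heights should be multiples of 16, a common constraint for latent diffusion models that you should confirm for your platform. The preset names are made up for this example.

```python
from math import gcd

# The presets listed above as (width, height). Names are illustrative only.
PRESETS = {
    "social_square": (1024, 1024),
    "social_story": (1080, 1920),
    "product": (1280, 1280),
    "poster_wide": (2048, 1080),
    "poster_square": (2048, 2048),
    "storyboard": (1024, 768),
}


def snap(value: int, multiple: int = 16) -> int:
    """Round a dimension to the nearest multiple (16 is an assumption)."""
    if value <= 0:
        raise ValueError("dimension must be positive")
    return max(multiple, ((value + multiple // 2) // multiple) * multiple)


def aspect_ratio(width: int, height: int) -> str:
    """Reduce width:height to lowest terms, e.g. 1024x768 -> '4:3'."""
    d = gcd(width, height)
    return f"{width // d}:{height // d}"


for name, (w, h) in PRESETS.items():
    print(f"{name}: {w}x{h} ({aspect_ratio(w, h)}) -> {snap(w)}x{snap(h)}")
```

Under that assumption, 1080 rounds to 1088. The 9:16 social preset would therefore be sent as 1088x1920, and the widescreen poster as 2048x1088.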

3. Multi-Platform Flexibility

Z-Image fits your workflow—not the other way around. It works seamlessly across three popular platforms, so beginners, developers, and creators all get a setup that clicks.

Each platform is tailored to different needs, and all three are easy to get started with:

- HuggingFace: Perfect for developers or anyone wanting local deployment. Grab pre-trained weights, run the model on your own device, or tweak the code for custom use cases.
- FAL.ai: Ideal for fast, cloud-based API generation. Integrate Z-Image into apps, tools, or batch workflows without managing local hardware.
- ComfyUI: For creators who want workflow customization. Drag and connect nodes to fine-tune steps (e.g., add filters, adjust prompts) without coding.

No matter which you choose, Z-Image keeps performance consistent—so you can switch platforms without re-learning how to generate great images.

4. Stable Parameter Control

Z-Image makes fine-tuning easy—even for beginners—with intuitive parameters that balance consistency and creativity, no technical expertise required.

Key controls that simplify your workflow:

- Seed control: Lock a seed to replicate the same image, or tweak prompts while keeping core elements consistent (perfect for refining ideas).
- Strength/denoise settings: Adjust how much Z-Image modifies an existing image (for edits/inpainting) or cleans up generated visuals. Lower values keep more of the original; higher values allow more creative changes.
- Guidance scale: Balance creativity against prompt adherence (1–5 = free-flowing, 6–10 = strict adherence to the prompt). Higher values suit product shots that need accuracy; lower values suit art that needs flair.
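In most diffusion pipelines, these three controls map onto simple arithmetic. The sketch below illustrates that arithmetic; it is not Z-Image’s own code. It assumes three things:

- a seeded noise generator;
- the diffusers-style img2img convention, where strength scales how many denoising steps actually run;
- standard classifier-free guidance.

The function names are hypothetical.

```python
import random


def seeded_noise(seed: int, n: int) -> list[float]:
    """Same seed -> same starting noise, which is why a locked seed reproduces an image."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def steps_to_run(num_steps: int, strength: float) -> int:
    """Img2img convention (assumed): strength 0.0 keeps the input, 1.0 repaints it fully."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    return min(int(num_steps * strength), num_steps)


def apply_guidance(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance: move from the unconditional prediction toward
    (and, for scale > 1, past) the prompt-conditioned prediction."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# A locked seed reproduces the same noise; a different seed gives a different start.
assert seeded_noise(42, 4) == seeded_noise(42, 4)
assert seeded_noise(42, 4) != seeded_noise(7, 4)

print(steps_to_run(20, 0.25))  # light touch-up: 5 of 20 steps
print(steps_to_run(20, 0.75))  # heavy edit: 15 of 20 steps
print(apply_guidance([0.0, 1.0], [1.0, 1.0], 7.0))  # [7.0, 1.0]
```

The guidance example shows why a high scale follows the prompt more strictly. Wherever the prompt-conditioned prediction differs from the unconditional one, that difference is amplified. Where the two agree, nothing changes.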

How to Use Z-Image

Z-Image’s multi-platform support means getting started is straightforward—pick your platform, follow a few steps, and start generating. We’ll break down the most common setups first.

1. Using Z-Image on HuggingFace

HuggingFace is the go-to for local deployment (run Z-Image on your own device) or custom tweaks. Even if you’re new to coding, the setup is simple.

Getting Z-Image running locally comes down to three core steps: install the Python libraries, download the model weights, and generate an image from a prompt.

2. Using Z-Image via FAL.ai

FAL.ai is the fastest way to use Z-Image without local setup—no GPU, no coding expertise required. It’s cloud-powered, so you can generate images via API or integrate it into your apps in minutes. The FAL.ai API workflow is beginner-friendly, with clear steps and no hidden hoops. Here’s how to get started:

Step 1: Create a FAL.ai Project

- Go to FAL.ai’s official site and sign up (free tier available).
- Click “Create Project” → Name your project (e.g., “Z-Image-Generation”) → Select “Z-Image” from the model library.
- Confirm the project; you’ll be directed to your project dashboard.

Step 2: Get Your API Key

- In your project dashboard, navigate to “Settings” → “API Keys”.
- Click “Generate New Key” → Name it (e.g., “Z-Image-Access”) → Copy the key and save it somewhere safe, since you’ll only see it once!

Step 3: Use the API Endpoint

FAL.ai provides a ready-to-use endpoint for Z-Image. You can test it with tools like Postman, curl, or even simple Python code (no advanced coding needed).

3. Using Z-Image in ComfyUI

ComfyUI is perfect for creators who want to customize image generation (e.g., add filters, tweak steps) with a drag-and-drop interface—no coding needed. Setting up Z-Image here is straightforward, even for beginners. Follow these 4 simple steps to get Z-Image running in ComfyUI quickly.

Step 1: Prep ComfyUI (If Not Already Installed)

- Download ComfyUI from its official GitHub repository (supports Windows/macOS/Linux).
- Ensure you have Python 3.10+ and, for smooth performance, a GPU with 16GB+ VRAM (CPU-only works but is much slower).

Step 2: Get Z-Image Files

- Workflow File: Grab the pre-built Z-Image ComfyUI workflow (JSON) from Z-Image’s Hugging Face repo (look for “ComfyUI_Z-Image_Workflow.json” in the “Files” tab).
- Model Weights: Download the Z-Image checkpoint (e.g., z-image-v1.safetensors) from the same repo.

Step 3: Place Files in Correct Folders

- Open your ComfyUI folder → Navigate to models/checkpoints/ → Paste the Z-Image model weights here.
- No extra setup is needed for the workflow file; you’ll load it directly in ComfyUI next.
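Before launching, you can confirm the files landed in the right place. This small Python check is a convenience sketch, not part of ComfyUI. Point it at your ComfyUI folder and it lists the `.safetensors` files in `models/checkpoints/`. It also reports whether your Python meets the 3.10+ requirement from Step 1. The helper names are hypothetical.

```python
import sys
from pathlib import Path


def python_ok(minimum: tuple[int, int] = (3, 10)) -> bool:
    """True if the running interpreter meets the minimum version (3.10+ here)."""
    return sys.version_info[:2] >= minimum


def find_checkpoints(comfyui_root: str) -> list[str]:
    """Return the sorted names of .safetensors files in models/checkpoints/."""
    folder = Path(comfyui_root) / "models" / "checkpoints"
    if not folder.is_dir():
        raise FileNotFoundError(f"no models/checkpoints folder under {comfyui_root!r}")
    return sorted(p.name for p in folder.glob("*.safetensors"))
```

Suppose your install lives in a folder named `ComfyUI` and you downloaded the example file name from Step 2. Once the weights are in place, `"z-image-v1.safetensors" in find_checkpoints("ComfyUI")` should be `True`.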

Step 4: Load & Run the Workflow

- Launch ComfyUI (with the Windows portable build, run run_nvidia_gpu.bat in the ComfyUI_windows_portable folder; with a manual install on macOS/Linux, run python main.py from the ComfyUI folder).
- In the ComfyUI interface: Click Load (top-right) → Select the downloaded Z-Image workflow JSON, or simply drag the JSON file onto the canvas.
- The workflow loads pre-connected: all nodes (prompt input, model, sampler, output) are ready to use.
- Type your prompt (e.g., “photorealistic sunset landscape”) → Click Queue (top-right) to generate!
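Once the workflow runs from the UI, you can also queue it from a script through ComfyUI’s local HTTP server. The sketch below makes these assumptions:

- the server is at its default address, `http://127.0.0.1:8188`;
- you have a copy of the workflow saved in API format (the regular UI save format has a different structure);
- you know the id of the prompt node, which you look up in that file.

The node id `"6"` and the file name in the usage note are only examples.

```python
import json
import urllib.request
import uuid

COMFY_URL = "http://127.0.0.1:8188"  # ComfyUI's default local address


def set_prompt_text(workflow: dict, node_id: str, text: str) -> dict:
    """Return a copy of an API-format workflow with one node's "text" input replaced."""
    updated = json.loads(json.dumps(workflow))  # deep copy via a JSON round-trip
    updated[node_id]["inputs"]["text"] = text
    return updated


def build_queue_request(workflow: dict) -> urllib.request.Request:
    """Build (but do not send) the POST /prompt request that queues a workflow."""
    body = {"prompt": workflow, "client_id": str(uuid.uuid4())}
    return urllib.request.Request(
        f"{COMFY_URL}/prompt",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue(workflow_path: str, node_id: str, text: str) -> dict:
    """Load an API-format workflow, set the prompt, and queue it on the local server."""
    with open(workflow_path, encoding="utf-8") as f:
        workflow = json.load(f)
    request = build_queue_request(set_prompt_text(workflow, node_id, text))
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)  # typically includes a prompt_id you can look up via /history
```

For example, `queue("z_image_api.json", "6", "photorealistic sunset landscape")` would queue one run with a new prompt, without touching the UI.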

Why Choose Z-Image?

Z-Image stands out as the go-to AI image model for beginners and pros alike. It combines speed, quality, and flexibility without the learning curve or hardware barriers of competitors.

Here’s what sets it apart:

- Faster than rivals: Generates images in seconds with turbo inference, outpacing SDXL and PixArt-Alpha.
- Pro-level quality: Sharp details, minimal artifacts, and consistent results across styles (realistic, anime, product).
- Multi-platform ease: Works on HuggingFace (local), FAL.ai (API), and ComfyUI (custom workflows) to fit any setup.
- For all users: Creators, designers, and developers alike benefit from its intuitive controls and reliable performance.

If you want an AI model that’s fast, easy to use, and delivers top-tier results, Z-Image is the choice.

FAQs

Q1: Do I need a powerful GPU to use Z-Image?

Not necessarily. Z-Image is optimized for accessibility: a consumer GPU with 16GB+ VRAM (e.g., an RTX 4060 Ti 16GB, RTX 4080, or RTX 4090) works smoothly for local deployment (HuggingFace/ComfyUI). If you don’t have a suitable GPU, use FAL.ai’s cloud API, which needs no local hardware.

Q2: Can Z-Image generate text in images (e.g., logos, slogans)?

Yes! It supports bilingual text rendering (English/Chinese) with crisp, readable results, unlike many competitors (e.g., Stable Diffusion XL, Midjourney) that struggle with text. For best results, include the text details in your prompt (e.g., “a logo with the text ‘EcoBoost’ in bold sans-serif font”).

Q3: Is Z-Image free to use for commercial projects?

Yes! Z-Image is open-source and free for commercial use (check the official license for details). You can use generated images for marketing, e-commerce, apps, or other business needs without paying fees.

Q4: How do I fix blurry or inconsistent results?

Stick to the prompt structure (Subject + Style + Composition + Lighting) and use the scenario-based parameter presets (e.g., guidance scale 8–9 for product shots). If images are blurry, increase the resolution (e.g., 1280x1280) or add “sharp textures”/“4K” to your prompt. For consistency, lock the seed value.
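A tiny helper makes it easy to apply the Subject + Style + Composition + Lighting structure consistently. This sketch simply joins the parts with commas. That is an assumed convention, not a format Z-Image requires. It also lets you append quality tags such as “sharp textures” or “4K”.

```python
def build_prompt(subject: str, style: str = "", composition: str = "",
                 lighting: str = "", extras: tuple[str, ...] = ()) -> str:
    """Join Subject + Style + Composition + Lighting (+ extras), skipping empty parts."""
    parts = [subject, style, composition, lighting, *extras]
    return ", ".join(p.strip() for p in parts if p.strip())


print(build_prompt(
    "a leather handbag on a marble table",
    style="product photography",
    composition="centered close-up",
    lighting="soft studio lighting",
    extras=("sharp textures", "4K"),
))
# a leather handbag on a marble table, product photography, centered close-up, soft studio lighting, sharp textures, 4K
```

Keeping the subject first and the quality tags last makes prompts easy to compare when you lock the seed and change one part at a time.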

Q5: Can I integrate Z-Image into my own app or tool?

Absolutely! Use FAL.ai’s ready-to-use API for quick integration—no complex setup. For custom builds, HuggingFace provides Python libraries to embed Z-Image directly into your code. Both options support batch generation and parameter customization (e.g., resolution, style).
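Batch generation usually comes down to sending one request per variant. The sketch below builds those requests for a product series. The field names are hypothetical, so match them to FAL.ai’s input schema or your pipeline’s arguments. Reusing one seed across the set is one way to keep the composition similar while only the described detail changes.

```python
def build_batch(template: str, variants: list[str], seed: int = 1234,
                width: int = 1280, height: int = 1280) -> list[dict]:
    """Create one request payload per variant, filling {variant} in the template."""
    return [
        {"prompt": template.format(variant=v), "seed": seed,
         "width": width, "height": height}
        for v in variants
    ]


jobs = build_batch("a {variant} running sneaker, studio product shot, white background",
                   ["red", "navy", "olive"])
for job in jobs:
    print(job["prompt"])
```

Each payload can then be sent through whichever integration you chose, one request at a time or concurrently if your plan allows it.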